It's Machine Learning, yo

Re: It's Machine Learning, yo

fuck you, my drunk uncle was a saint

Re: It's Machine Learning, yo

Meanwhile, years ago...

KingRoyal wrote: It's only a matter of time before Google becomes a sea of poorly generated websites

Re: It's Machine Learning, yo

Yeah, it's been bad for a while. But there's been a notable acceleration in the decline of search result quality since OpenAI started licensing GPT models commercially.

How fleeting are all human passions compared with the massive continuity of ducks.

- Mongrel

- Posts: 21354

- Joined: Mon Jan 20, 2014 6:28 pm

- Location: There's winners and there's losers // And I'm south of that line

Re: It's Machine Learning, yo

So McKinsey released a report predicting that AI "could be worth 4.4 trillion" to the global economy.

Except this is the same McKinsey that also released a report saying the Metaverse could be worth 5 trillion dollars.

As a friend of mine commented "McKinsey is not, I think, trying to actually predict the future here, really."

Re: It's Machine Learning, yo

Ugh, had an irritating interaction at work.

My company, famously startup-brained, decided to hire a guy to "make AI happen". This guy, from MIT, declared himself "Director of AI" and started making a chatbot for our site, powered by Copilot.

Now, my team creates training, documentation, and certification curricula. Because our product is still essentially in its minimum-viable-product phase, it is not safe to work on or within. If you want to, say, change a part, you have to do a lot of things to power off electrical enclosures, lock and tag safety doors, lay down virtual zones to prevent bots from moving in, etc. There's a lot you have to do.

This guy's chatbot just appeared on our site one day, letting our techs start plugging questions into it. I was pretty shocked this thing was just deployed like this. I typed in "how do you replace a charge rail", which is a lethal electrical component that takes a lot of work to make safe enough to work on. It returned a 4-step process that, if followed, would kill you. This is because the AI scraped our docs, found the one part in the middle where you stick your tools in, and pulled out that snippet as the answer.

Essentially, this MIT dipshit designed it to give "simple answers", which in practice means "answers no more than 5 lines long". So if you ask it a question and the answer is complex (like the 30-page document it scraped from), it will just find the one 5-line nugget it thinks is the answer and present that.

I met with the guy to talk this out, and he was just flat-out unable to [A] listen to me or [B] conceive of the possibility that the tool could be unsafe in its current state, and, my favorite part, [C] unwilling to even entertain changes, because I was "blocking a tool that can save hundreds of thousands of dollars". I was just like, "well, it's worthless if it's unsafe, right? Who gives a fuck how much money it saves if it gets someone killed?" and yeah it was just an entirely unproductive exchange.

I escalated, got the director-level to agree that this was a problem, got my director to talk to his director, etc etc, and got it removed, so it is now in a "testing state" and "will not be released until it goes through a thorough certification review", but like, of course I do not trust that these people will do the responsible thing here. I will have to be watching this shit like a hawk and raise the red flag if it goes up again.

People want magical AI solutions so bad, they want to use this new technology so bad, and it drives me nuts that nobody takes any responsibility for the dangers because of the excitement. Fuck off with that.

Also: This is like the fourth time in my career I've worked with someone from MIT, and they're all incredibly shitty at actually making something that's good, because they've all been convinced they are the smartest motherfucker on Earth and cannot take feedback or, god forbid, criticism. They're typically very knowledgeable about one thing, love to hear themselves talk, and have just never learned how to work with other people. Incredibly useless, entitled fuckos to have to endure working around.

- Mongrel

- Posts: 21354

- Joined: Mon Jan 20, 2014 6:28 pm

- Location: There's winners and there's losers // And I'm south of that line

Re: It's Machine Learning, yo

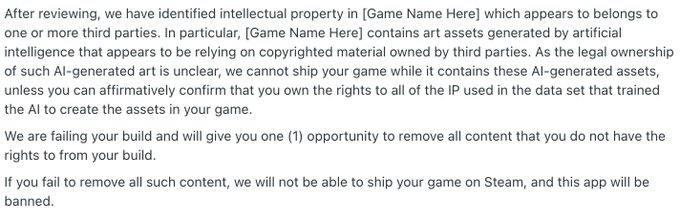

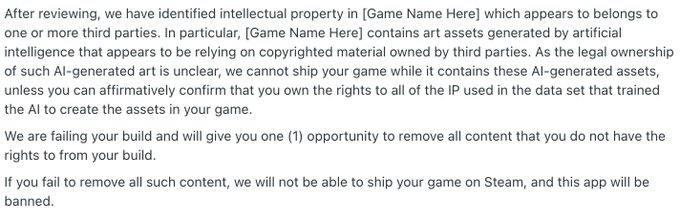

Could also go in the game news thread or copyright thread.

One of the interesting twists on all the high-volume AI-generated trash is that legally, it's actually impossible to copyright AI-produced material. This all hinges on a single ruling made years ago in a case you might recall, which was over who owned the copyright to this picture:

The most relevant bit being that

In December 2014, the United States Copyright Office stated that works created by a non-human, such as a photograph taken by a monkey, are not copyrightable.

As a result of this determination, made only a few years ago and only by an accident of happenstance (but a good one, IMO!), AI-produced content cannot be copyrighted in the US (and, by extension of US hegemony, globally in any practical sense).

Why mention this?

Well, Valve just instituted a policy resting on this very real concern. AI-generated games would otherwise be flooding Steam with trash (which was actually happening), so games with AI-generated content will be removed from the Steam platform unless the owners can prove they also have copyright or legal use of the full data set any such AI work was trained on:

Re: It's Machine Learning, yo

Mongrel wrote: One of the interesting twists on all the high-volume AI-generated trash is that legally, it's actually impossible to copyright AI-produced material. This all hinges on a single ruling made years ago in a case you might recall

[...]

The most relevant bit being that

In December 2014, the United States Copyright Office stated that works created by a non-human, such as a photograph taken by a monkey, are not copyrightable.

I don't really agree with the importance you're placing on the monkey-selfie case (and it seems contradictory to simultaneously claim that it "all hinges on a single ruling" from 2016 and "the most relevant bit" is a statement by the Copyright Office in 2014), but yes, that particular round of frivolous litigation did lead to the courts and the Copyright Office expressly stating something that they'd previously just kind of assumed everybody already understood, that copyrights are for creative works by human beings.

Additionally, I'd say that even in the hypothetical event that AI-generated content were subject to copyright, that wouldn't, in itself, resolve the potential issues with using training data that somebody else owns a copyright on. I think the more relevant caselaw there would be music sampling.

- Mongrel

- Posts: 21354

- Joined: Mon Jan 20, 2014 6:28 pm

- Location: There's winners and there's losers // And I'm south of that line

Re: It's Machine Learning, yo

Thad wrote:

Mongrel wrote: One of the interesting twists on all the high-volume AI-generated trash is that legally, it's actually impossible to copyright AI-produced material. This all hinges on a single ruling made years ago in a case you might recall

[...]

The most relevant bit being that

In December 2014, the United States Copyright Office stated that works created by a non-human, such as a photograph taken by a monkey, are not copyrightable.

I don't really agree with the importance you're placing on the monkey-selfie case (and it seems contradictory to simultaneously claim that it "all hinges on a single ruling" from 2016 and "the most relevant bit" is a statement by the Copyright Office in 2014), but yes, that particular round of frivolous litigation did lead to the courts and the Copyright Office expressly stating something that they'd previously just kind of assumed everybody already understood, that copyrights are for creative works by human beings.

Additionally, I'd say that even in the hypothetical event that AI-generated content were subject to copyright, that wouldn't, in itself, resolve the potential issues with using training data that somebody else owns a copyright on. I think the more relevant caselaw there would be music sampling.

Well, the photo being from back in 2011 means that the 2014 and 2016 decisions didn't necessarily follow from each other; those were two separate branches.

But by "ruling" I meant the Copyright Office's statement. Imprecise wording on my part.

In any case, I think you know how much the current American legal and legislative environment values things which are "assumed everybody already understood". So yes, I do think the fact that someone made a formal decision setting an explicit precedent did and does matter quite a lot.

Re: It's Machine Learning, yo

‘It’s destroyed me completely’: Kenyan moderators decry toll of training of AI models

During all the tech hype around these chatbots, the fact that they require thousands of people marking answers right or wrong was conveniently left out. And like all moderation, it falls on teams of poorly treated workers to take the bullet of truly awful stuff to keep it from reaching our eyes.

The 27-year-old said he would view up to 700 text passages a day, many depicting graphic sexual violence. He recalls he started avoiding people after having read texts about rapists and found himself projecting paranoid narratives on to people around him. Then last year, his wife told him he was a changed man, and left. She was pregnant at the time. “I lost my family,” he said.

- Brantly B.

- Woah Dangsaurus

- Posts: 3679

- Joined: Mon Jan 20, 2014 2:40 pm

Re: It's Machine Learning, yo

I don't know if the manpower involved in filtering training data was ever downplayed (and if you get into this stuff yourself, you learn very quickly how much manpower is really involved there). The fact that it was offloaded to underpaid foreign temps was underplayed, but in retrospect it's kinda obvious a for-profit company would do that.

It's an open secret that viewing the internet as a whole is going to expose you to a whole bunch of stuff that you don't ever want to see. These guys are a lot luckier than, say, social media mods in a way: they're not watching things play out in real time, with limited ability to do anything about it.

My personal current thrust (I don't want to say "interest") is actually toward using ML to detect imminent tragedies, since I'm sitting right on the balance point of "terrible things have happened to people I know on social media" and "I do not want a bunch of Kenyans reading my shit, even for good reasons" (with a dash of "I'm probably more inured to working with this sort of bullshit than most people").

Re: It's Machine Learning, yo

Brentai wrote: I don't know if the manpower involved in filtering training data was ever downplayed (and if you get into this stuff yourself, you learn very quickly how much manpower is really involved there).

I don't know if "downplayed" is the right word but it does seem like a detail I don't see mentioned much in most mainstream articles on the subject. And I don't know if the average person thinks much about the connection between those "click on the cars" verification systems and Waymo.

Silicon Valley did an episode where Dinesh had to look at dick pics all day to train an AI to block them. On the one hand, I think it did a good job of explaining the process in a way laypeople could understand it; on the other, reducing it to dick jokes kinda trivialized the genuine trauma involved.

Brentai wrote: My personal current thrust (I don't want to say "interest") is actually toward using ML to detect imminent tragedies, since I'm sitting right on the balance point of "terrible things have happened to people I know on social media" and "I do not want a bunch of Kenyans reading my shit, even for good reasons" (with a dash of "I'm probably more inured to working with this sort of bullshit than most people").

Yeah, that's a use that has massive positive implications but comes with worse-than-usual privacy implications at scale. We could use more people looking into ethical implementations; good on ya.

- Brantly B.

- Woah Dangsaurus

- Posts: 3679

- Joined: Mon Jan 20, 2014 2:40 pm

Re: It's Machine Learning, yo

In the, um, month since I expressed optimism about the power requirements of ML, I need to double back on that, since the New Tech Arms Race has definitely pushed those power requirements from "reasonable" to "uh oh" territory almost immediately.

It's still never going to be remotely comparable to Bitcoin, which both required and encouraged *PUs to run at max capacity for infinite time, but it's a bad trend for sure.

Re: It's Machine Learning, yo

Report: Potential NYT lawsuit could force OpenAI to wipe ChatGPT and start over

The headline is some Ashley Belanger clickbait nonsense, but I've been thinking about the story in the aggregate and have some stray thoughts:

I've got plenty of concerns about LLMs but I really don't think copyright is the correct mechanism for addressing them.

Analysis of whether or not a work infringes copyright should be based on the work itself, the final result, not the means it used to get there.

Under no circumstances should scraping, in itself, be considered copyright infringement. The consequences of such a ruling would be staggering and intensely negative. Even assuming they did it in a way that managed not to make search engines illegal, there are all kinds of things that ML can be used for besides building shitty content farm sites. (If I'm developing an OCR app, should I be required to get a license for every proprietary typeface in its training set?)

Yes, there is a serious looming problem with companies using AI as an excuse to pay workers less. At some point we as a society need to accept that this is a capitalism problem and address it on those terms. We need stronger unions, worker protections, and a social safety net. Believing, in 2023, that expanding copyright will help the artists creating things rather than the big-media publishers who own them is some Lucy-with-the-football-ass shit.

I think, on balance, having a robots.txt-style means of opting out of scraping is a good idea, but that complying should be an etiquette thing rather than a legal thing (just like robots.txt). I also think that sites should really think about unintended consequences before they say "no scraping" -- again, there are lots of valid uses for that information. Remember all those researchers who've been shut out of analyzing Twitter data because Musk started charging exorbitant amounts for API access? That's the kind of thing you'd be blocking if you put out a blanket ban on scraping a site for training data.
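For anyone curious what honoring that kind of opt-out looks like on the scraper's side, here's a rough sketch using Python's standard-library `urllib.robotparser`. To be clear about what's made up: the `ExampleTrainingBot` user agent, the `may_scrape` helper, and the sample robots.txt are all hypothetical, and this only shows the voluntary-compliance idea, not how any real crawler actually behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt a site might publish to opt out of ML scraping
# while still allowing ordinary crawlers. "ExampleTrainingBot" is a made-up
# user agent used purely for illustration.
ROBOTS_TXT = """\
User-agent: ExampleTrainingBot
Disallow: /

User-agent: *
Allow: /
"""


def may_scrape(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/forum/thread-1"
    print("training bot allowed:", may_scrape("ExampleTrainingBot", url))
    print("other crawler allowed:", may_scrape("SomeSearchBot", url))
```

Nothing here is enforced by anything; a scraper that never calls the check just scrapes anyway, which is the "etiquette, not law" part.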

Last thought: if scraped content does end up being a copyright issue, could copyleft be a remedy? Some kind of license that says "you can freely use this data in your app provided you release your source code and aggregate training data under the same terms"?

Re: It's Machine Learning, yo

This is just bugging me for some reason: Google Image Search for "Tiananmen Square Tank Man" Turns Up AI-Generated Selfie.

By my clicking around, it shows up higher or lower by computer logic I won't pretend to understand. Either way, it sucks and I hate it, and I feel you should hate it too. I feel I should also note it doesn't show up in a DuckDuckGo image search, but I couldn't tell you if that's because it's less garbage or just less thorough.

Mention something from KPCC or Rachel Maddow

Go on about Homeworld for X posts

- beatbandito

- Posts: 4308

- Joined: Tue Jan 21, 2014 8:04 am

Re: It's Machine Learning, yo

Trudeau's butt on the left is so good it's almost better than the one in black-ass.

Re: It's Machine Learning, yo

Can anyone clarify the OpenAI/Microsoft weirdness of the last week or so?

OpenAI fired their CEO and Microsoft snapped him up? He's stealing a bunch of OpenAI people?

I've seen a few people talk about how this is a secret fight between EA and E/ACC guys, but that just sounds like a lot of jerking off. Usual people screaming equal parts doom and elation at the AGI coming around the corner.

Is this just investor nonsense?

Re: It's Machine Learning, yo

That's kinda what I'm getting out of it.

I don't know nearly enough about OpenAI company politics to make sense of it, but I saw some commentary to the effect of "think of it less like a regular company and more like a cult" and I think that's the take I'm going to go with.
